Warning “esx.problem.hyperthreading.unmitigated” after installing ESXi patches. This warning may appear after you install the patches contained in release ESXi601 (Build 9313334, 14 Aug 2018). If you have not also updated your vCenter Server, it may show up as “XXX esx.problem.hyperthreading.unmitigated.formatOnHost not found XXX” instead.


Upgraded one of our ESXi hosts with the latest patches released today that are aimed at fixing the L1 Terminal Fault issues. After that the host started giving this warning: esx.problem.hyperthreading.unmitigated. No idea what it’s supposed to mean!

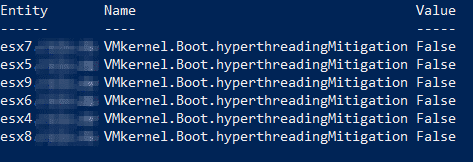

Went to Configure > Settings > Advanced System Settings and searched for anything with “hyperthread” in it. Found VMkernel.Boot.hyperthreadingMitigation, which was set to “false” and sounded suspiciously similar to the warning I had. Changed it to “true”, rebooted the host, and then Googled the setting, which led me to this KB article. It’s a good read, but here are some excerpts if you are only interested in the highlights:


Like Meltdown, Rogue System Register Read, and “Lazy FP state restore”, the “L1 Terminal Fault” vulnerability can occur when affected Intel microprocessors speculate beyond an unpermitted data access. By continuing the speculation in these cases, the affected Intel microprocessors expose a new side-channel for attack. (Note, however, that architectural correctness is still provided as the speculative operations will be later nullified at instruction retirement.)

CVE-2018-3646 is one of these Intel microprocessor vulnerabilities and impacts hypervisors. It may allow a malicious VM running on a given CPU core to effectively infer contents of the hypervisor’s or another VM’s privileged information residing at the same time in the same core’s L1 Data cache. Because current Intel processors share the physically-addressed L1 Data Cache across both logical processors of a Hyperthreading (HT) enabled core, indiscriminate simultaneous scheduling of software threads on both logical processors creates the potential for further information leakage. CVE-2018-3646 has two currently known attack vectors which will be referred to here as “Sequential-Context” and “Concurrent-Context.” Both attack vectors must be addressed to mitigate CVE-2018-3646.

Attack Vector Summary

- Sequential-context attack vector: a malicious VM can potentially infer recently accessed L1 data of a previous context (hypervisor thread or other VM thread) on either logical processor of a processor core.

- Concurrent-context attack vector: a malicious VM can potentially infer recently accessed L1 data of a concurrently executing context (hypervisor thread or other VM thread) on the other logical processor of the hyperthreading-enabled processor core.
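
To make the difference between the two vectors concrete, here is a toy Python model. It is my own illustration, not how ESXi tracks or schedules work: a schedule is a list of hypothetical `Slot` records saying which context runs on which (core, hyperthread) in a discrete time slot, and the `l1d_flush` flag is an assumed, simplified stand-in for the sequential-context mitigation ("nothing survives in L1 between contexts").

```python
from typing import NamedTuple

HYPERVISOR = "hypervisor"  # every other context name is treated as a VM


class Slot(NamedTuple):
    time: int      # discrete time slot
    core: int      # physical core id
    thread: int    # logical processor (hyperthread) on that core
    context: str   # "hypervisor" or a VM name such as "vmA"


def concurrent_exposures(slots: list[Slot]) -> set[tuple[str, str]]:
    """(victim, attacker) pairs running in the same time slot on sibling
    hyperthreads of one core, i.e. sharing that core's L1 Data cache."""
    return {
        (victim.context, attacker.context)
        for victim in slots
        for attacker in slots
        if attacker.context != HYPERVISOR          # only a VM can be the attacker
        and victim.context != attacker.context
        and victim.time == attacker.time
        and victim.core == attacker.core
        and victim.thread != attacker.thread
    }


def sequential_exposures(slots: list[Slot], l1d_flush: bool) -> set[tuple[str, str]]:
    """(victim, attacker) pairs where a VM runs on either logical processor
    of a core in the slot right after another context ran on that core.
    l1d_flush=True models a cache flush between contexts, removing the leak."""
    if l1d_flush:
        return set()
    return {
        (victim.context, attacker.context)
        for victim in slots
        for attacker in slots
        if attacker.context != HYPERVISOR
        and victim.context != attacker.context
        and victim.core == attacker.core
        and attacker.time == victim.time + 1
    }


schedule = [
    Slot(0, core=0, thread=0, context="vmA"),
    Slot(0, core=0, thread=1, context="vmB"),
    Slot(1, core=0, thread=0, context=HYPERVISOR),
    Slot(2, core=0, thread=1, context="vmA"),
]
print(sorted(concurrent_exposures(schedule)))                # [('vmA', 'vmB'), ('vmB', 'vmA')]
print(sorted(sequential_exposures(schedule, l1d_flush=False)))  # [('hypervisor', 'vmA')]
print(sequential_exposures(schedule, l1d_flush=True))        # set()
```

In this model, flushing between contexts removes the sequential pairs but leaves the concurrent ones untouched, which is why the two vectors need separate mitigations.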


Mitigation Summary

- Mitigation of the Sequential-Context attack vector is achieved by vSphere updates and patches. This mitigation is enabled by default and does not impose a significant performance impact. Please see resolution section for details.

- Mitigation of the Concurrent-context attack vector requires enablement of a new feature known as the ESXi Side-Channel-Aware Scheduler. The initial version of this feature will only schedule the hypervisor and VMs on one logical processor of an Intel Hyperthreading-enabled core. This feature may impose a non-trivial performance impact and is not enabled by default.
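
A rough way to see where the performance cost comes from: if the scheduler uses only one logical processor per Hyperthreading-enabled core, the number of logical processors available for scheduling drops from cores × threads to just cores. The Python sketch below only counts slots with a hypothetical `place_contexts` helper (not an ESXi API). The real impact depends on the workload, because two hyperthreads share one core's execution resources and never delivered twice a core's throughput in the first place.

```python
def place_contexts(contexts: list[str], cores: int, threads_per_core: int,
                   sca_enabled: bool) -> tuple[dict[str, tuple[int, int]], list[str]]:
    """Assign runnable contexts to free (core, thread) logical processors.

    With sca_enabled, only thread 0 of each core is used, mirroring the
    'one logical processor per Hyperthreading-enabled core' rule; contexts
    that do not fit have to wait for a later time slot.
    """
    threads_used = 1 if sca_enabled else threads_per_core
    usable = [(core, thread) for core in range(cores) for thread in range(threads_used)]
    placed = dict(zip(contexts, usable))   # zip stops at the shorter list
    waiting = contexts[len(usable):]       # contexts left without a logical CPU
    return placed, waiting


vcpus = ["vm1.vcpu0", "vm1.vcpu1", "vm2.vcpu0", "vm2.vcpu1"]
print(place_contexts(vcpus, cores=2, threads_per_core=2, sca_enabled=False))
# ({'vm1.vcpu0': (0, 0), 'vm1.vcpu1': (0, 1), 'vm2.vcpu0': (1, 0), 'vm2.vcpu1': (1, 1)}, [])
print(place_contexts(vcpus, cores=2, threads_per_core=2, sca_enabled=True))
# ({'vm1.vcpu0': (0, 0), 'vm1.vcpu1': (1, 0)}, ['vm2.vcpu0', 'vm2.vcpu1'])
```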

So that’s what the warning was about. To enable the ESXi Side-Channel-Aware Scheduler, we need to set the VMkernel.Boot.hyperthreadingMitigation key above to “true”. More excerpts:

The Concurrent-context attack vector is mitigated through enablement of the ESXi Side-Channel-Aware Scheduler which is included in the updates and patches listed in VMSA-2018-0020. This scheduler is not enabled by default. Enablement of this scheduler may impose a non-trivial performance impact on applications running in a vSphere environment. The goal of the Planning Phase is to understand if your current environment has sufficient CPU capacity to enable the scheduler without operational impact.


The following list summarizes potential problem areas after enabling the ESXi Side-Channel-Aware Scheduler:

- VMs configured with vCPUs greater than the physical cores available on the ESXi host

- VMs configured with custom affinity or NUMA settings

- VMs with latency-sensitive configuration

- ESXi hosts with Average CPU Usage greater than 70%

- Hosts with custom CPU resource management options enabled

- HA Clusters where a rolling upgrade will increase Average CPU Usage above 100%

Note: It may be necessary to acquire additional hardware, or rebalance existing workloads, before enablement of the ESXi Side-Channel-Aware Scheduler. Organizations can choose not to enable the ESXi Side-Channel-Aware Scheduler after performing a risk assessment and accepting the risk posed by the Concurrent-context attack vector. This is NOT RECOMMENDED and VMware cannot make this decision on behalf of an organization.
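
For the Planning Phase, the problem areas listed above can be turned into a simple checklist. The Python sketch below works on inventory data you would gather yourself: the `Host`/`Vm` classes, the helper names, and all figures are hypothetical, and nothing here talks to vCenter. The rolling-upgrade estimate assumes identical hosts and that an evacuated host's load spreads evenly over the rest, which is a simplification.

```python
from dataclasses import dataclass, field

CPU_USAGE_THRESHOLD_PCT = 70.0  # "Average CPU Usage greater than 70%"


@dataclass
class Vm:
    name: str
    vcpus: int
    custom_affinity_or_numa: bool = False
    latency_sensitive: bool = False


@dataclass
class Host:
    name: str
    physical_cores: int
    avg_cpu_usage_pct: float
    custom_cpu_resource_mgmt: bool = False
    vms: list[Vm] = field(default_factory=list)


def host_findings(host: Host) -> list[str]:
    """Flag the per-host and per-VM problem areas from the KB list."""
    findings = []
    if host.avg_cpu_usage_pct > CPU_USAGE_THRESHOLD_PCT:
        findings.append(f"{host.name}: average CPU usage {host.avg_cpu_usage_pct:.0f}% "
                        f"exceeds {CPU_USAGE_THRESHOLD_PCT:.0f}%")
    if host.custom_cpu_resource_mgmt:
        findings.append(f"{host.name}: custom CPU resource management options enabled")
    for vm in host.vms:
        if vm.vcpus > host.physical_cores:
            findings.append(f"{vm.name}: {vm.vcpus} vCPUs > {host.physical_cores} physical cores")
        if vm.custom_affinity_or_numa:
            findings.append(f"{vm.name}: custom affinity or NUMA settings")
        if vm.latency_sensitive:
            findings.append(f"{vm.name}: latency-sensitive configuration")
    return findings


def rolling_upgrade_usage_pct(hosts: list[Host]) -> float:
    """Average CPU usage of the remaining hosts while one host is in
    maintenance mode (identical hosts, evenly spread load assumed)."""
    if len(hosts) < 2:
        raise ValueError("need at least two hosts")
    return sum(h.avg_cpu_usage_pct for h in hosts) / (len(hosts) - 1)


def cluster_findings(hosts: list[Host]) -> list[str]:
    findings = [f for h in hosts for f in host_findings(h)]
    projected = rolling_upgrade_usage_pct(hosts)
    if projected > 100.0:
        findings.append(f"cluster: rolling upgrade would push average CPU usage to {projected:.1f}%")
    return findings


cluster = [
    Host("esx01", physical_cores=8, avg_cpu_usage_pct=75.0, vms=[Vm("db01", vcpus=12)]),
    Host("esx02", physical_cores=8, avg_cpu_usage_pct=60.0),
    Host("esx03", physical_cores=8, avg_cpu_usage_pct=70.0),
]
for finding in cluster_findings(cluster):
    print(finding)
```

With these made-up numbers, esx01 and db01 are flagged individually, and taking any one host out leaves the other two at an average of (75 + 60 + 70) / 2 = 102.5%, so the cluster as a whole is flagged too.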

So to fix the second issue (the Concurrent-context attack vector) we need to enable the new scheduler. That can come with a performance hit, which is why it is off by default: best to enable it deliberately, so you are aware of it and can keep an eye on the load and performance. Also, if you are not in a shared environment and are willing to accept the risk, you don’t need to enable it either, although VMware does not recommend that. Makes sense.

That warning message could have been a bit more verbose though! :)

Lately I have been writing on a variety of topics regarding the use of VOBs (VMkernel Observations) for creating useful vCenter Alarms.

I figured it would also be useful to collect a list of all the vSphere VOBs, at least from what I can gather by looking at `/usr/lib/vmware/hostd/extensions/hostdiag/locale/en/event.vmsg` on the latest version of ESXi. The list below is quite extensive: there are a total of 308 vSphere VOBs, not including the VSAN VOBs from my previous articles. For those of you who use vSphere Replication, you may also find a couple of handy ones in the list.

| VOB ID | VOB Description |
|---|---|
| ad.event.ImportCertEvent | Import certificate success |
| ad.event.ImportCertFailedEvent | Import certificate failure |
| ad.event.JoinDomainEvent | Join domain success |
| ad.event.JoinDomainFailedEvent | Join domain failure |
| ad.event.LeaveDomainEvent | Leave domain success |
| ad.event.LeaveDomainFailedEvent | Leave domain failure |
| com.vmware.vc.HA.CreateConfigVvolFailedEvent | vSphere HA failed to create a configuration vVol for this datastore and so will not be able to protect virtual machines on the datastore until the problem is resolved. Error: {fault} |
| com.vmware.vc.HA.CreateConfigVvolSucceededEvent | vSphere HA successfully created a configuration vVol after the previous failure |
| com.vmware.vc.HA.DasHostCompleteDatastoreFailureEvent | Host complete datastore failure |
| com.vmware.vc.HA.DasHostCompleteNetworkFailureEvent | Host complete network failure |
| com.vmware.vc.VmCloneFailedInvalidDestinationEvent | Cannot complete virtual machine clone. |
| com.vmware.vc.VmCloneToResourcePoolFailedEvent | Cannot complete virtual machine clone. |
| com.vmware.vc.VmDiskConsolidatedEvent | Virtual machine disks consolidation succeeded. |
| com.vmware.vc.VmDiskConsolidationNeeded | Virtual machine disks consolidation needed. |
| com.vmware.vc.VmDiskConsolidationNoLongerNeeded | Virtual machine disks consolidation no longer needed. |
| com.vmware.vc.VmDiskFailedToConsolidateEvent | Virtual machine disks consolidation failed. |
| com.vmware.vc.datastore.UpdateVmFilesFailedEvent | Failed to update VM files |
| com.vmware.vc.datastore.UpdatedVmFilesEvent | Updated VM files |
| com.vmware.vc.datastore.UpdatingVmFilesEvent | Updating VM Files |
| com.vmware.vc.ft.VmAffectedByDasDisabledEvent | Fault Tolerance VM restart disabled |
| com.vmware.vc.guestOperations.GuestOperation | Guest operation |
| com.vmware.vc.guestOperations.GuestOperationAuthFailure | Guest operation authentication failure |
| com.vmware.vc.host.clear.vFlashResource.inaccessible | Host's virtual flash resource is accessible. |
| com.vmware.vc.host.clear.vFlashResource.reachthreshold | Host's virtual flash resource usage dropped below the threshold. |
| com.vmware.vc.host.problem.vFlashResource.inaccessible | Host's virtual flash resource is inaccessible. |
| com.vmware.vc.host.problem.vFlashResource.reachthreshold | Host's virtual flash resource usage exceeds the threshold. |
| com.vmware.vc.host.vFlash.VFlashResourceCapacityExtendedEvent | Virtual flash resource capacity is extended |
| com.vmware.vc.host.vFlash.VFlashResourceConfiguredEvent | Virtual flash resource is configured on the host |
| com.vmware.vc.host.vFlash.VFlashResourceRemovedEvent | Virtual flash resource is removed from the host |
| com.vmware.vc.host.vFlash.defaultModuleChangedEvent | Default virtual flash module is changed to {vFlashModule} on the host |
| com.vmware.vc.host.vFlash.modulesLoadedEvent | Virtual flash modules are loaded or reloaded on the host |
| com.vmware.vc.npt.VmAdapterEnteredPassthroughEvent | Virtual NIC entered passthrough mode |
| com.vmware.vc.npt.VmAdapterExitedPassthroughEvent | Virtual NIC exited passthrough mode |
| com.vmware.vc.vcp.FtDisabledVmTreatAsNonFtEvent | FT Disabled VM protected as non-FT VM |
| com.vmware.vc.vcp.FtFailoverEvent | Failover FT VM due to component failure |
| com.vmware.vc.vcp.FtFailoverFailedEvent | FT VM failover failed |
| com.vmware.vc.vcp.FtSecondaryRestartEvent | Restarting FT secondary due to component failure |
| com.vmware.vc.vcp.FtSecondaryRestartFailedEvent | FT secondary VM restart failed |
| com.vmware.vc.vcp.NeedSecondaryFtVmTreatAsNonFtEvent | Need secondary VM protected as non-FT VM |
| com.vmware.vc.vcp.TestEndEvent | VM Component Protection test ends |
| com.vmware.vc.vcp.TestStartEvent | VM Component Protection test starts |
| com.vmware.vc.vcp.VcpNoActionEvent | No action on VM |
| com.vmware.vc.vcp.VmDatastoreFailedEvent | Virtual machine lost datastore access |
| com.vmware.vc.vcp.VmNetworkFailedEvent | Virtual machine lost VM network accessibility |
| com.vmware.vc.vcp.VmPowerOffHangEvent | VM power off hang |
| com.vmware.vc.vcp.VmRestartEvent | Restarting VM due to component failure |
| com.vmware.vc.vcp.VmRestartFailedEvent | Virtual machine affected by component failure failed to restart |
| com.vmware.vc.vcp.VmWaitForCandidateHostEvent | No candidate host to restart |
| com.vmware.vc.vm.VmStateFailedToRevertToSnapshot | Failed to revert the virtual machine state to a snapshot |
| com.vmware.vc.vm.VmStateRevertedToSnapshot | The virtual machine state has been reverted to a snapshot |
| com.vmware.vc.vmam.AppMonitoringNotSupported | Application Monitoring Is Not Supported |
| com.vmware.vc.vmam.VmAppHealthMonitoringStateChangedEvent | vSphere HA detected application heartbeat status change |
| com.vmware.vc.vmam.VmAppHealthStateChangedEvent | vSphere HA detected application state change |
| com.vmware.vc.vmam.VmDasAppHeartbeatFailedEvent | vSphere HA detected application heartbeat failure |
| esx.audit.agent.hostd.started | VMware Host Agent started |
| esx.audit.agent.hostd.stopped | VMware Host Agent stopped |
| esx.audit.dcui.defaults.factoryrestore | Restoring factory defaults through DCUI. |
| esx.audit.dcui.disabled | The DCUI has been disabled. |
| esx.audit.dcui.enabled | The DCUI has been enabled. |
| esx.audit.dcui.host.reboot | Rebooting host through DCUI. |
| esx.audit.dcui.host.shutdown | Shutting down host through DCUI. |
| esx.audit.dcui.hostagents.restart | Restarting host agents through DCUI. |
| esx.audit.dcui.login.failed | Login authentication on DCUI failed |
| esx.audit.dcui.login.passwd.changed | DCUI login password changed. |
| esx.audit.dcui.network.factoryrestore | Factory network settings restored through DCUI. |
| esx.audit.dcui.network.restart | Restarting network through DCUI. |
| esx.audit.esxcli.host.poweroff | Powering off host through esxcli |
| esx.audit.esxcli.host.reboot | Rebooting host through esxcli |
| esx.audit.esximage.hostacceptance.changed | Host acceptance level changed |
| esx.audit.esximage.install.novalidation | Attempting to install an image profile with validation disabled. |
| esx.audit.esximage.install.securityalert | SECURITY ALERT: Installing image profile. |
| esx.audit.esximage.profile.install.successful | Successfully installed image profile. |
| esx.audit.esximage.profile.update.successful | Successfully updated host to new image profile. |
| esx.audit.esximage.vib.install.successful | Successfully installed VIBs. |
| esx.audit.esximage.vib.remove.successful | Successfully removed VIBs |
| esx.audit.host.boot | Host has booted. |
| esx.audit.host.maxRegisteredVMsExceeded | The number of virtual machines registered on the host exceeded limit. |
| esx.audit.host.stop.reboot | Host is rebooting. |
| esx.audit.host.stop.shutdown | Host is shutting down. |
| esx.audit.lockdownmode.disabled | Administrator access to the host has been enabled. |
| esx.audit.lockdownmode.enabled | Administrator access to the host has been disabled. |
| esx.audit.maintenancemode.canceled | The host has canceled entering maintenance mode. |
| esx.audit.maintenancemode.entered | The host has entered maintenance mode. |
| esx.audit.maintenancemode.entering | The host has begun entering maintenance mode. |
| esx.audit.maintenancemode.exited | The host has exited maintenance mode. |
| esx.audit.net.firewall.config.changed | Firewall configuration has changed. |
| esx.audit.net.firewall.disabled | Firewall has been disabled. |
| esx.audit.net.firewall.enabled | Firewall has been enabled for port. |
| esx.audit.net.firewall.port.hooked | Port is now protected by Firewall. |
| esx.audit.net.firewall.port.removed | Port is no longer protected with Firewall. |
| esx.audit.net.lacp.disable | LACP disabled |
| esx.audit.net.lacp.enable | LACP enabled |
| esx.audit.net.lacp.uplink.connected | uplink is connected |
| esx.audit.shell.disabled | The ESXi command line shell has been disabled. |
| esx.audit.shell.enabled | The ESXi command line shell has been enabled. |
| esx.audit.ssh.disabled | SSH access has been disabled. |
| esx.audit.ssh.enabled | SSH access has been enabled. |
| esx.audit.usb.config.changed | USB configuration has changed. |
| esx.audit.uw.secpolicy.alldomains.level.changed | Enforcement level changed for all security domains. |
| esx.audit.uw.secpolicy.domain.level.changed | Enforcement level changed for security domain. |
| esx.audit.vmfs.lvm.device.discovered | LVM device discovered. |
| esx.audit.vmfs.volume.mounted | File system mounted. |
| esx.audit.vmfs.volume.umounted | LVM volume un-mounted. |
| esx.clear.coredump.configured | A vmkcore disk partition is available and/or a network coredump server has been configured. Host core dumps will be saved. |
| esx.clear.coredump.configured2 | At least one coredump target has been configured. Host core dumps will be saved. |
| esx.clear.net.connectivity.restored | Restored network connectivity to portgroups |
| esx.clear.net.dvport.connectivity.restored | Restored Network Connectivity to DVPorts |
| esx.clear.net.dvport.redundancy.restored | Restored Network Redundancy to DVPorts |
| esx.clear.net.lacp.lag.transition.up | lag transition up |
| esx.clear.net.lacp.uplink.transition.up | uplink transition up |
| esx.clear.net.lacp.uplink.unblocked | uplink is unblocked |
| esx.clear.net.redundancy.restored | Restored uplink redundancy to portgroups |
| esx.clear.net.vmnic.linkstate.up | Link state up |
| esx.clear.scsi.device.io.latency.improved | Scsi Device I/O Latency has improved |
| esx.clear.scsi.device.state.on | Device has been turned on administratively. |
| esx.clear.scsi.device.state.permanentloss.deviceonline | Device that was permanently inaccessible is now online. |
| esx.clear.storage.apd.exit | Exited the All Paths Down state |
| esx.clear.storage.connectivity.restored | Restored connectivity to storage device |
| esx.clear.storage.redundancy.restored | Restored path redundancy to storage device |
| esx.problem.3rdParty.error | A 3rd party component on ESXi has reported an error. |
| esx.problem.3rdParty.information | A 3rd party component on ESXi has reported an informational event. |
| esx.problem.3rdParty.warning | A 3rd party component on ESXi has reported a warning. |
| esx.problem.apei.bert.memory.error.corrected | A corrected memory error occurred |
| esx.problem.apei.bert.memory.error.fatal | A fatal memory error occurred |
| esx.problem.apei.bert.memory.error.recoverable | A recoverable memory error occurred |
| esx.problem.apei.bert.pcie.error.corrected | A corrected PCIe error occurred |
| esx.problem.apei.bert.pcie.error.fatal | A fatal PCIe error occurred |
| esx.problem.apei.bert.pcie.error.recoverable | A recoverable PCIe error occurred |
| esx.problem.application.core.dumped | An application running on ESXi host has crashed and a core file was created. |
| esx.problem.boot.filesystem.down | Lost connectivity to the device backing the boot filesystem |
| esx.problem.coredump.capacity.insufficient | The storage capacity of the coredump targets is insufficient to capture a complete coredump. |
| esx.problem.coredump.unconfigured | No vmkcore disk partition is available and no network coredump server has been configured. Host core dumps cannot be saved. |
| esx.problem.coredump.unconfigured2 | No coredump target has been configured. Host core dumps cannot be saved. |
| esx.problem.cpu.amd.mce.dram.disabled | DRAM ECC not enabled. Please enable it in BIOS. |
| esx.problem.cpu.intel.ioapic.listing.error | Not all IO-APICs are listed in the DMAR. Not enabling interrupt remapping on this platform. |
| esx.problem.cpu.mce.invalid | MCE monitoring will be disabled as an unsupported CPU was detected. Please consult the ESX HCL for information on supported hardware. |
| esx.problem.cpu.smp.ht.invalid | Disabling HyperThreading due to invalid configuration: Number of threads: {1} Number of PCPUs: {2}. |
| esx.problem.cpu.smp.ht.numpcpus.max | Found {1} PCPUs but only using {2} of them due to specified limit. |
| esx.problem.cpu.smp.ht.partner.missing | Disabling HyperThreading due to invalid configuration: HT partner {1} is missing from PCPU {2}. |
| esx.problem.dhclient.lease.none | Unable to obtain a DHCP lease. |
| esx.problem.dhclient.lease.offered.noexpiry | No expiry time on offered DHCP lease. |
| esx.problem.esximage.install.error | Could not install image profile. |
| esx.problem.esximage.install.invalidhardware | Host doesn't meet image profile hardware requirements. |
| esx.problem.esximage.install.stage.error | Could not stage image profile. |
| esx.problem.hardware.acpi.interrupt.routing.device.invalid | Skipping interrupt routing entry with bad device number: {1}. This is a BIOS bug. |
| esx.problem.hardware.acpi.interrupt.routing.pin.invalid | Skipping interrupt routing entry with bad device pin: {1}. This is a BIOS bug. |
| esx.problem.hardware.ioapic.missing | IOAPIC Num {1} is missing. Please check BIOS settings to enable this IOAPIC. |
| esx.problem.host.coredump | An unread host kernel core dump has been found. |
| esx.problem.hostd.core.dumped | Hostd crashed and a core file was created. |
| esx.problem.iorm.badversion | Storage I/O Control version mismatch |
| esx.problem.iorm.nonviworkload | Unmanaged workload detected on SIOC-enabled datastore |
| esx.problem.migrate.vmotion.default.heap.create.failed | Failed to create default migration heap |
| esx.problem.migrate.vmotion.server.pending.cnx.listen.socket.shutdown | Error with migration listen socket |
| esx.problem.net.connectivity.lost | Lost Network Connectivity |
| esx.problem.net.dvport.connectivity.lost | Lost Network Connectivity to DVPorts |
| esx.problem.net.dvport.redundancy.degraded | Network Redundancy Degraded on DVPorts |
| esx.problem.net.dvport.redundancy.lost | Lost Network Redundancy on DVPorts |
| esx.problem.net.e1000.tso6.notsupported | No IPv6 TSO support |
| esx.problem.net.fence.port.badfenceid | Invalid fenceId configuration on dvPort |
| esx.problem.net.fence.resource.limited | Maximum number of fence networks or ports |
| esx.problem.net.fence.switch.unavailable | Switch fence property is not set |
| esx.problem.net.firewall.config.failed | Firewall configuration operation failed. The changes were not applied. |
| esx.problem.net.firewall.port.hookfailed | Adding port to Firewall failed. |
| esx.problem.net.gateway.set.failed | Failed to set gateway |
| esx.problem.net.heap.belowthreshold | Network memory pool threshold |
| esx.problem.net.lacp.lag.transition.down | lag transition down |
| esx.problem.net.lacp.peer.noresponse | No peer response |
| esx.problem.net.lacp.policy.incompatible | Current teaming policy is incompatible |
| esx.problem.net.lacp.policy.linkstatus | Current teaming policy is incompatible |
| esx.problem.net.lacp.uplink.blocked | uplink is blocked |
| esx.problem.net.lacp.uplink.disconnected | uplink is disconnected |
| esx.problem.net.lacp.uplink.fail.duplex | uplink duplex mode is different |
| esx.problem.net.lacp.uplink.fail.speed | uplink speed is different |
| esx.problem.net.lacp.uplink.inactive | All uplinks must be active |
| esx.problem.net.lacp.uplink.transition.down | uplink transition down |
| esx.problem.net.migrate.bindtovmk | Invalid vmknic specified in /Migrate/Vmknic |
| esx.problem.net.migrate.unsupported.latency | Unsupported vMotion network latency detected |
| esx.problem.net.portset.port.full | Failed to apply for free ports |
| esx.problem.net.portset.port.vlan.invalidid | Vlan ID of the port is invalid |
| esx.problem.net.proxyswitch.port.unavailable | Virtual NIC connection to switch failed |
| esx.problem.net.redundancy.degraded | Network Redundancy Degraded |
| esx.problem.net.redundancy.lost | Lost Network Redundancy |
| esx.problem.net.uplink.mtu.failed | Failed to set MTU on an uplink |
| esx.problem.net.vmknic.ip.duplicate | A duplicate IP address was detected on a vmknic interface |
| esx.problem.net.vmnic.linkstate.down | Link state down |
| esx.problem.net.vmnic.linkstate.flapping | Link state unstable |
| esx.problem.net.vmnic.watchdog.reset | Nic Watchdog Reset |
| esx.problem.ntpd.clock.correction.error | NTP daemon stopped. Time correction out of bounds. |
| esx.problem.pageretire.platform.retire.request | Memory page retirement requested by platform firmware. |
| esx.problem.pageretire.selectedmpnthreshold.host.exceeded | Number of host physical memory pages selected for retirement exceeds threshold. |
| esx.problem.scratch.partition.size.small | Size of scratch partition is too small. |
| esx.problem.scratch.partition.unconfigured | No scratch partition has been configured. |
| esx.problem.scsi.apd.event.descriptor.alloc.failed | No memory to allocate APD Event |
| esx.problem.scsi.device.close.failed | Scsi Device close failed. |
| esx.problem.scsi.device.detach.failed | Device detach failed |
| esx.problem.scsi.device.filter.attach.failed | Failed to attach filter to device. |
| esx.problem.scsi.device.io.bad.plugin.type | Plugin trying to issue command to device does not have a valid storage plugin type. |
| esx.problem.scsi.device.io.inquiry.failed | Failed to obtain INQUIRY data from the device |
| esx.problem.scsi.device.io.invalid.disk.qfull.value | Scsi device queue parameters incorrectly set. |
| esx.problem.scsi.device.io.latency.high | Scsi Device I/O Latency going high |
| esx.problem.scsi.device.io.qerr.change.config | QErr cannot be changed on device. Please change it manually on the device if possible. |
| esx.problem.scsi.device.io.qerr.changed | Scsi Device QErr setting changed |
| esx.problem.scsi.device.is.local.failed | Plugin's isLocal entry point failed |
| esx.problem.scsi.device.is.pseudo.failed | Plugin's isPseudo entry point failed |
| esx.problem.scsi.device.is.ssd.failed | Plugin's isSSD entry point failed |
| esx.problem.scsi.device.limitreached | Maximum number of storage devices |
| esx.problem.scsi.device.state.off | Device has been turned off administratively. |
| esx.problem.scsi.device.state.permanentloss | Device has been removed or is permanently inaccessible. |
| esx.problem.scsi.device.state.permanentloss.noopens | Permanently inaccessible device has no more opens. |
| esx.problem.scsi.device.state.permanentloss.pluggedback | Device has been plugged back in after being marked permanently inaccessible. |
| esx.problem.scsi.device.state.permanentloss.withreservationheld | Device has been removed or is permanently inaccessible. |
| esx.problem.scsi.device.thinprov.atquota | Thin Provisioned Device Nearing Capacity |
| esx.problem.scsi.scsipath.badpath.unreachpe | vVol PE path going out of vVol-incapable adapter |
| esx.problem.scsi.scsipath.badpath.unsafepe | Cannot safely determine vVol PE |
| esx.problem.scsi.scsipath.limitreached | Maximum number of storage paths |
| esx.problem.scsi.unsupported.plugin.type | Storage plugin of unsupported type tried to register. |
| esx.problem.storage.apd.start | All paths are down |
| esx.problem.storage.apd.timeout | All Paths Down timed out, I/Os will be fast failed |
| esx.problem.storage.connectivity.devicepor | Frequent PowerOn Reset Unit Attention of Storage Path |
| esx.problem.storage.connectivity.lost | Lost Storage Connectivity |
| esx.problem.storage.connectivity.pathpor | Frequent PowerOn Reset Unit Attention of Storage Path |
| esx.problem.storage.connectivity.pathstatechanges | Frequent State Changes of Storage Path |
| esx.problem.storage.iscsi.discovery.connect.error | iSCSI discovery target login connection problem |
| esx.problem.storage.iscsi.discovery.login.error | iSCSI Discovery target login error |
| esx.problem.storage.iscsi.isns.discovery.error | iSCSI iSns Discovery error |
| esx.problem.storage.iscsi.target.connect.error | iSCSI Target login connection problem |
| esx.problem.storage.iscsi.target.login.error | iSCSI Target login error |
| esx.problem.storage.iscsi.target.permanently.lost | iSCSI target permanently removed |
| esx.problem.storage.redundancy.degraded | Degraded Storage Path Redundancy |
| esx.problem.storage.redundancy.lost | Lost Storage Path Redundancy |
| esx.problem.syslog.config | System logging is not configured. |
| esx.problem.syslog.nonpersistent | System logs are stored on non-persistent storage. |
| esx.problem.vfat.filesystem.full.other | A VFAT filesystem is full. |
| esx.problem.vfat.filesystem.full.scratch | A VFAT filesystem, being used as the host's scratch partition, is full. |
| esx.problem.visorfs.failure | An operation on the root filesystem has failed. |
| esx.problem.visorfs.inodetable.full | The root filesystem's file table is full. |
| esx.problem.visorfs.ramdisk.full | A ramdisk is full. |
| esx.problem.visorfs.ramdisk.inodetable.full | A ramdisk's file table is full. |
| esx.problem.vm.kill.unexpected.fault.failure | A VM could not fault in a page. The VM is terminated as further progress is impossible. |
| esx.problem.vm.kill.unexpected.forcefulPageRetire | A VM did not respond to swap actions and is forcefully powered off to prevent system instability. |
| esx.problem.vm.kill.unexpected.noSwapResponse | A VM did not respond to swap actions and is forcefully powered off to prevent system instability. |
| esx.problem.vm.kill.unexpected.vmtrack | A VM is allocating too many pages while system is critically low in free memory. It is forcefully terminated to prevent system instability. |
| esx.problem.vmfs.ats.support.lost | Device Backing VMFS has lost ATS Support |
| esx.problem.vmfs.error.volume.is.locked | VMFS Locked By Remote Host |
| esx.problem.vmfs.extent.offline | Device backing an extent of a file system is offline. |
| esx.problem.vmfs.extent.online | Device backing an extent of a file system came online |
| esx.problem.vmfs.heartbeat.recovered | VMFS Volume Connectivity Restored |
| esx.problem.vmfs.heartbeat.timedout | VMFS Volume Connectivity Degraded |
| esx.problem.vmfs.heartbeat.unrecoverable | VMFS Volume Connectivity Lost |
| esx.problem.vmfs.journal.createfailed | No Space To Create VMFS Journal |
| esx.problem.vmfs.lock.corruptondisk | VMFS Lock Corruption Detected |
| esx.problem.vmfs.lock.corruptondisk.v2 | VMFS Lock Corruption Detected |
| esx.problem.vmfs.nfs.mount.connect.failed | Unable to connect to NFS server |
| esx.problem.vmfs.nfs.mount.limit.exceeded | NFS has reached the maximum number of supported volumes |
| esx.problem.vmfs.nfs.server.disconnect | Lost connection to NFS server |
| esx.problem.vmfs.nfs.server.restored | Restored connection to NFS server |
| esx.problem.vmfs.resource.corruptondisk | VMFS Resource Corruption Detected |
| esx.problem.vmsyslogd.remote.failure | Remote logging host has become unreachable. |
| esx.problem.vmsyslogd.storage.failure | Logging to storage has failed. |
| esx.problem.vmsyslogd.storage.logdir.invalid | The configured log directory cannot be used. The default directory will be used instead. |
| esx.problem.vmsyslogd.unexpected | Log daemon has failed for an unexpected reason. |
| esx.problem.vpxa.core.dumped | Vpxa crashed and a core file was created. |
| hbr.primary.AppQuiescedDeltaCompletedEvent | Application consistent delta completed. |
| hbr.primary.ConnectionRestoredToHbrServerEvent | Connection to VR Server restored. |
| hbr.primary.DeltaAbortedEvent | Delta aborted. |
| hbr.primary.DeltaCompletedEvent | Delta completed. |
| hbr.primary.DeltaStartedEvent | Delta started. |
| hbr.primary.FSQuiescedDeltaCompletedEvent | File system consistent delta completed. |
| hbr.primary.FSQuiescedSnapshot | Application quiescing failed during replication. |
| hbr.primary.FailedToStartDeltaEvent | Failed to start delta. |
| hbr.primary.FailedToStartSyncEvent | Failed to start full sync. |
| hbr.primary.HostLicenseFailedEvent | vSphere Replication is not licensed replication is disabled. |
| hbr.primary.InvalidDiskReplicationConfigurationEvent | Disk replication configuration is invalid. |
| hbr.primary.InvalidVmReplicationConfigurationEvent | Virtual machine replication configuration is invalid. |
| hbr.primary.NoConnectionToHbrServerEvent | No connection to VR Server. |
| hbr.primary.NoProgressWithHbrServerEvent | VR Server error: {[email protected]} |
| hbr.primary.QuiesceNotSupported | Quiescing is not supported for this virtual machine. |
| hbr.primary.SyncCompletedEvent | Full sync completed. |
| hbr.primary.SyncStartedEvent | Full sync started. |
| hbr.primary.SystemPausedReplication | System has paused replication. |
| hbr.primary.UnquiescedDeltaCompletedEvent | Delta completed. |
| hbr.primary.UnquiescedSnapshot | Unable to quiesce the guest. |
| hbr.primary.VmLicenseFailedEvent | vSphere Replication is not licensed replication is disabled. |
| hbr.primary.VmReplicationConfigurationChangedEvent | Replication configuration changed. |
| vim.event.LicenseDowngradedEvent | License downgrade |
| vim.event.SystemSwapInaccessible | System swap inaccessible |
| vim.event.UnsupportedHardwareVersionEvent | This virtual machine uses hardware version {version} which is no longer supported. Upgrade is recommended. |
| vprob.net.connectivity.lost | Lost Network Connectivity |
| vprob.net.e1000.tso6.notsupported | No IPv6 TSO support |
| vprob.net.migrate.bindtovmk | Invalid vmknic specified in /Migrate/Vmknic |
| vprob.net.proxyswitch.port.unavailable | Virtual NIC connection to switch failed |
| vprob.net.redundancy.degraded | Network Redundancy Degraded |
| vprob.net.redundancy.lost | Lost Network Redundancy |
| vprob.scsi.device.thinprov.atquota | Thin Provisioned Device Nearing Capacity |
| vprob.storage.connectivity.lost | Lost Storage Connectivity |
| vprob.storage.redundancy.degraded | Degraded Storage Path Redundancy |
| vprob.storage.redundancy.lost | Lost Storage Path Redundancy |
| vprob.vmfs.error.volume.is.locked | VMFS Locked By Remote Host |
| vprob.vmfs.extent.offline | Device backing an extent of a file system is offline. |
| vprob.vmfs.extent.online | Device backing an extent of a file system is online. |
| vprob.vmfs.heartbeat.recovered | VMFS Volume Connectivity Restored |
| vprob.vmfs.heartbeat.timedout | VMFS Volume Connectivity Degraded |
| vprob.vmfs.heartbeat.unrecoverable | VMFS Volume Connectivity Lost |
| vprob.vmfs.journal.createfailed | No Space To Create VMFS Journal |
| vprob.vmfs.lock.corruptondisk | VMFS Lock Corruption Detected |
| vprob.vmfs.nfs.server.disconnect | Lost connection to NFS server |
| vprob.vmfs.nfs.server.restored | Restored connection to NFS server |
| vprob.vmfs.resource.corruptondisk | VMFS Resource Corruption Detected |
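
Since the point of this list is building vCenter Alarms, it can help to slice it programmatically. Below is a minimal Python sketch, using only the standard library, that parses rows of the Markdown table above into a dict and groups the VOB IDs by namespace prefix. The prefixes are simply read off this table, and the helper names (`parse_vob_table`, `namespace`) are my own hypothetical ones.

```python
import re
from collections import Counter

# One table row: "| <VOB ID> | <description> |". Header and separator rows
# do not match because their first cell is not a single dotted identifier.
ROW = re.compile(r"^\|\s*([A-Za-z0-9_.]+)\s*\|\s*(.*?)\s*\|$")

# Namespace prefixes as they appear in the table above.
PREFIXES = ("com.vmware.vc", "esx.audit", "esx.clear", "esx.problem",
            "hbr.primary", "vim.event", "vprob", "ad.event")


def parse_vob_table(markdown: str) -> dict[str, str]:
    """Map each VOB ID to its description, ignoring lines that are not rows."""
    vobs = {}
    for line in markdown.splitlines():
        match = ROW.match(line.strip())
        if match:
            vobs[match.group(1)] = match.group(2)
    return vobs


def namespace(vob_id: str) -> str:
    """Return the first known prefix the ID starts with, else 'other'."""
    for prefix in PREFIXES:
        if vob_id.startswith(prefix + "."):
            return prefix
    return "other"


sample = """
| VOB ID | VOB Description |
|---|---|
| esx.audit.host.boot | Host has booted. |
| esx.problem.storage.apd.start | All paths are down |
| esx.clear.storage.apd.exit | Exited the All Paths Down state |
| vprob.storage.connectivity.lost | Lost Storage Connectivity |
"""
vobs = parse_vob_table(sample)
print(Counter(namespace(v) for v in vobs))
storage_problems = [v for v in vobs if v.startswith("esx.problem.storage.")]
print(storage_problems)  # ['esx.problem.storage.apd.start']
```

Filtering on a prefix such as `esx.problem.storage.` is a quick way to shortlist candidates for storage alarms; the related `esx.clear.*` entries, where they exist, mark the corresponding recovery.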
